Innovations

Improved Productivity and Performance with Apache Spark®-based Tools from Daman

Achieving scale and productivity with our enterprise-grade Apache Spark® framework

Daman provides a proven framework to leverage the full potential of Spark technology

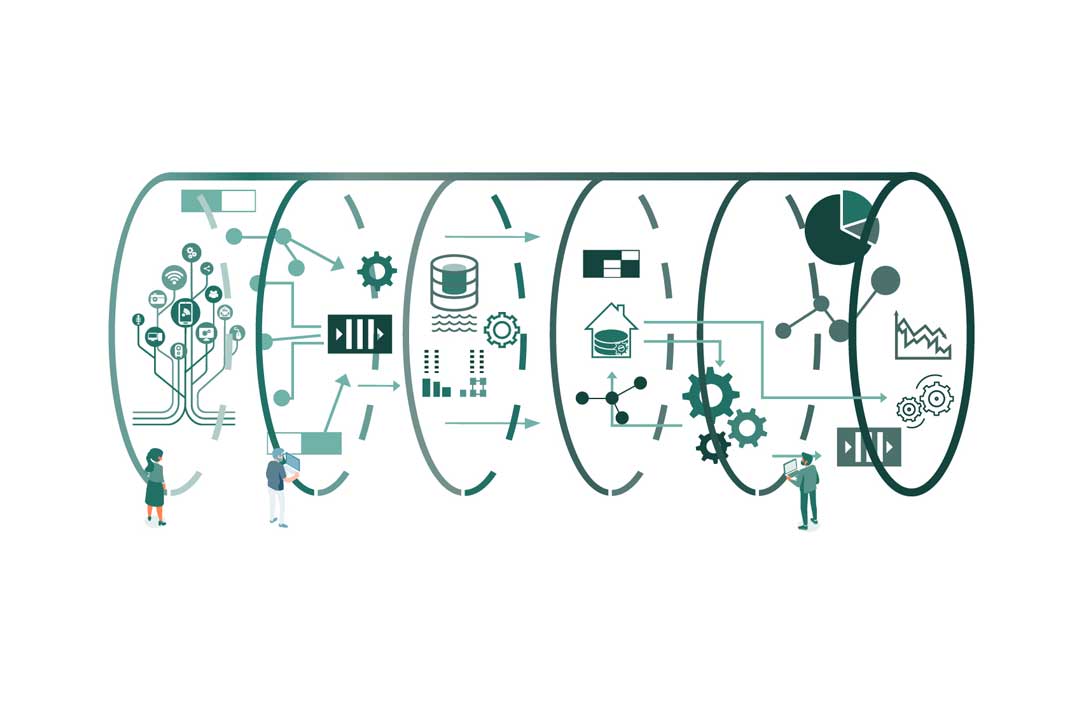

Spark is becoming the preferred technology in the world of data pipelines for data warehousing, replacing traditional extract, transform, load (ETL) tools as a means to populate modern cloud data warehouses. Spark can improve scalability, increase performance (throughput), and support a wide range of computations.

However, Spark is a complex technology, and organizations need a high level of programming skills and technical expertise to properly code, test, and maintain their Spark-based solutions. Organizations can also be challenged in managing their Spark initiatives across a variety of development teams that might follow different practices and procedures.

Daman has developed an innovative alternative to manually building high-performance data feeds for each business relationship

Daman provides a Spark-based ETL framework that organizations can use to build out their data pipelines in a productive, coordinated and cost-efficient manner. The Daman framework:

- includes pre-built routines that perform 80 percent of tasks typically involved in a data pipeline.

- accelerates development of data pipelines in a standardized manner and facilitates the quick operationalization and monitoring of these data pipelines.

- provides an orchestration mechanism to connect several data pipelines with dependencies, thereby creating a tightly integrated, complex processing unit.

- facilitates a standardized ETL project structure, with a template for defining ETL jobs that segregates extract and load from the transform step. This supports reusability, increases automation and greatly simplifies
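The orchestration point in the list above, running several data pipelines in an order that respects their dependencies, can be sketched with Python's standard-library `graphlib` module. The pipeline names and the `run_order` helper are hypothetical and are not part of the Daman framework; this is only a minimal illustration of dependency ordering.

```python
from graphlib import TopologicalSorter

# Hypothetical pipelines: each key maps to the set of pipelines it depends on.
DEPENDENCIES = {
    "load_customers": set(),
    "load_orders": set(),
    "build_sales_mart": {"load_customers", "load_orders"},
    "publish_report": {"build_sales_mart"},
}

def run_order(dependencies):
    """Return one valid execution order.

    Raises graphlib.CycleError if the dependencies are circular.
    """
    return list(TopologicalSorter(dependencies).static_order())

order = run_order(DEPENDENCIES)
```

Each pipeline appears in `order` only after everything it depends on, so a scheduler could run the list front to back.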

Get started on a path to self-service configurations that are smart enough to be simple!

The benefits are clear

Accelerate Development and Reduce TCO

By eliminating the need to write complex PySpark code for each ETL pipeline, the framework can significantly accelerate delivery. In addition, ETL pipelines can be defined as configuration files rather than code, which reduces total cost of ownership (TCO).
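As a rough illustration of what a configuration-defined pipeline can look like, the sketch below keeps extract, transform and load as separate steps driven by a single job definition. It uses plain Python rows so it runs without a Spark cluster. The config keys, the `TRANSFORMS` registry and `run_job` are assumptions made for this example, not the Daman framework's actual format.

```python
# Hypothetical job definition; in practice this could live in a JSON or YAML file.
JOB_CONFIG = {
    "extract": {"rows": [
        {"id": 1, "amount": "10.50", "country": "us"},
        {"id": 2, "amount": "3.25", "country": "de"},
    ]},
    "transform": [
        {"op": "cast", "column": "amount", "to": "float"},
        {"op": "upper", "column": "country"},
    ],
    "load": {"target": "sales_clean"},
}

# Reusable transform routines, looked up by the "op" name in the config.
def _cast(rows, step):
    caster = {"float": float, "int": int, "str": str}[step["to"]]
    return [{**r, step["column"]: caster(r[step["column"]])} for r in rows]

def _upper(rows, step):
    return [{**r, step["column"]: r[step["column"]].upper()} for r in rows]

TRANSFORMS = {"cast": _cast, "upper": _upper}

def run_job(config, sink):
    """Run extract, transform and load as separate steps driven by the config."""
    rows = config["extract"]["rows"]               # extract
    for step in config["transform"]:               # transform
        rows = TRANSFORMS[step["op"]](rows, step)
    sink[config["load"]["target"]] = rows          # load
    return rows

warehouse = {}  # stands in for the target data warehouse
result = run_job(JOB_CONFIG, warehouse)
```

Adding a new pipeline in this style means writing a new job definition, while the transform routines are reused unchanged.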

Integrate Data Quality Management

The Daman framework has built-in run-to-run controls that support data quality. It also includes a more sophisticated validation function for complex data validation.
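One common reading of a run-to-run control is a comparison of a metric, such as row count, between consecutive runs of the same pipeline. The sketch below assumes that interpretation. The function name and the 20 percent default tolerance are illustrative choices, not values taken from the Daman framework.

```python
def run_to_run_check(previous_count, current_count, tolerance=0.2):
    """Compare row counts of two consecutive runs.

    Returns (passed, relative_change). The check passes when the relative
    change stays within the tolerance.
    """
    if previous_count == 0:
        # No usable baseline: pass only if the current run is also empty.
        if current_count == 0:
            return True, 0.0
        return False, float("inf")
    change = abs(current_count - previous_count) / previous_count
    return change <= tolerance, change

passed, change = run_to_run_check(previous_count=1000, current_count=1100)
```

A failed check would typically halt the load or raise an alert before suspect data reaches the warehouse.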

Introduce DataOps Practices

Organizations can benefit from practices such as statistical process controls and metadata cataloging.
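Statistical process control applied to a pipeline usually means tracking a metric over past runs and flagging values that fall outside control limits. The sketch below uses the conventional mean plus or minus three standard deviations. The metric, the sample history and the helper names are assumptions for illustration.

```python
from statistics import mean, stdev

def control_limits(history, sigmas=3.0):
    """Return (lower, upper) control limits; needs at least two past values."""
    center = mean(history)
    spread = stdev(history)  # sample standard deviation
    return center - sigmas * spread, center + sigmas * spread

def in_control(value, history, sigmas=3.0):
    """True if the new value lies within the control limits of the history."""
    lower, upper = control_limits(history, sigmas)
    return lower <= value <= upper

# Hypothetical row counts from five earlier runs of the same pipeline.
daily_row_counts = [1000, 1020, 980, 1010, 990]
lower, upper = control_limits(daily_row_counts)
```

With this history, a new run of 1005 rows is in control, while a run of 1500 rows falls outside the limits and would warrant investigation.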

Optimize Performance

Functions within the framework are optimized for performance. In addition, the framework lets developers control Spark properties and cache DataFrames to tune performance further.
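For readers unfamiliar with Spark tuning, the sketch below shows one way per-job property overrides might be merged with defaults and applied when a session is created. `DEFAULT_PROPS`, `merge_props` and `build_session` are hypothetical helpers, not Daman APIs, and the property values are placeholders rather than recommendations. The Spark calls used (`SparkSession.builder`, `appName`, `config`, `getOrCreate`) are standard PySpark.

```python
# Illustrative defaults; suitable values depend on cluster size and data volume.
DEFAULT_PROPS = {
    "spark.sql.shuffle.partitions": "200",
    "spark.sql.adaptive.enabled": "true",
}

def merge_props(overrides=None):
    """Combine the defaults with per-job overrides; overrides win."""
    return {**DEFAULT_PROPS, **(overrides or {})}

def build_session(app_name, overrides=None):
    """Create a SparkSession with the merged properties (requires pyspark)."""
    # Imported lazily so merge_props can be used without Spark installed.
    from pyspark.sql import SparkSession

    builder = SparkSession.builder.appName(app_name)
    for key, value in merge_props(overrides).items():
        builder = builder.config(key, value)
    return builder.getOrCreate()

props = merge_props({"spark.sql.shuffle.partitions": "64"})
```

A DataFrame that several later steps reuse can be kept in memory with `df.cache()` and released with `df.unpersist()` once it is no longer needed.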

Backed by over a quarter century of innovation and expertise, Daman can help today’s businesses take full advantage of data sharing with cost-efficient, scalable and flexible solutions designed to meet their rapidly evolving business needs.