Innovations

Accelerated Data Supply Chains for Advanced Analytics

Daman modernizes DataOps to democratize data access and accelerate data supply chains

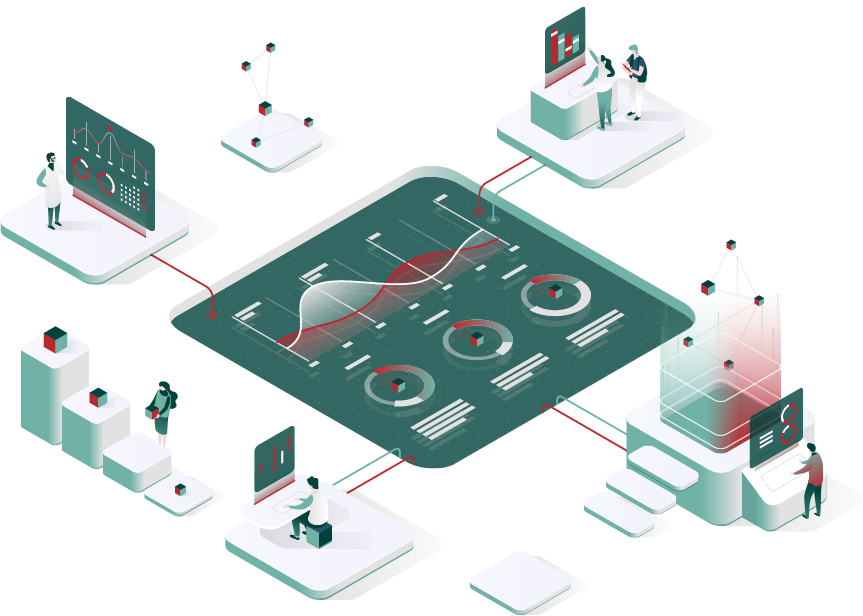

Digital transformation is being driven by large-scale adoption of advanced analytics, including machine learning and AI. To achieve it, organizations must shorten data delivery, model development, and deployment timelines. According to recent studies, however, many data scientists and analysts spend well over half their time curating data (finding, verifying, cleansing, conforming, and normalizing it) when they need to concentrate on developing data models and analytic artifacts that support critical analysis and decision making.

Daman’s innovative Data Provisioning Framework delivers Data-as-a-Service (DaaS)

Learn more about smart provisioning:

- a modern data pipeline that automates data acquisition and data wrangling and separates them from data use, closing the efficiency gap in the data-curation process.

- a configurable solution that supports a pub-sub model, delivering data in a full range of formats and frequencies.

- metadata-based code generation that lowers developer workload and shifts the focus to self-service data acquisition by data scientists and analysts.

- a platform-agnostic solution that future-proofs your data supply chain.
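To make the idea of metadata-based code generation concrete, here is a minimal sketch in Python. It is not Daman's implementation: the `ColumnSpec` class, the `generate_select` function, and the table and column names are all hypothetical, chosen only to show how a declarative description of a data set can be turned into a query without hand-written pipeline code.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class ColumnSpec:
    """Hypothetical metadata record describing one provisioned column."""
    source: str                 # column name in the source table
    target: str                 # column name exposed to data consumers
    cast: Optional[str] = None  # optional SQL type to cast to


def generate_select(table: str, columns: List[ColumnSpec]) -> str:
    """Generate a SELECT statement from column metadata (illustrative only)."""
    parts = []
    for col in columns:
        # Wrap the source column in a CAST only when the metadata asks for one.
        expr = f"CAST({col.source} AS {col.cast})" if col.cast else col.source
        parts.append(f"{expr} AS {col.target}")
    return f"SELECT {', '.join(parts)} FROM {table}"


# Assumed example metadata; a real catalog would hold these entries.
orders_metadata = [
    ColumnSpec("ord_id", "order_id"),
    ColumnSpec("amt", "amount", "DECIMAL(10,2)"),
]
query = generate_select("raw.orders", orders_metadata)
```

In this sketch, an analyst who needs another column edits the metadata rather than the pipeline code, which is the shift toward self-service that the list above describes.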

Information power brokers need powerful tools for success – get started today!

Realize the benefits of proven innovation

Accelerate Development and Reduce TCO

By leveraging pre-defined ETL patterns and curated data sets, data scientists can reduce effort on data wrangling, while data engineers can reduce effort on developing and maintaining data pipelines.

Separate Data Wrangling from Pipeline Execution

The transformation rules are kept separate from the execution engine. The pluggable architecture lets you use current or future data-transformation technologies.
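One way to picture this separation is the following Python sketch. It assumes a hypothetical `Engine` interface and a single in-process `LocalEngine`; none of these names come from the framework itself. The rules are plain functions that know nothing about how they are executed, so a different engine could be substituted without touching them.

```python
from typing import Callable, Dict, List, Protocol

Row = Dict[str, object]
Rule = Callable[[Row], Row]


class Engine(Protocol):
    """Hypothetical interface that any execution engine plug-in would satisfy."""
    def run(self, rows: List[Row], rules: List[Rule]) -> List[Row]: ...


class LocalEngine:
    """Simplest possible engine: applies each rule to each row, in order."""
    def run(self, rows: List[Row], rules: List[Rule]) -> List[Row]:
        out = []
        for row in rows:
            for rule in rules:
                row = rule(row)
            out.append(row)
        return out


# Transformation rules are defined independently of any engine.
def trim_name(row: Row) -> Row:
    return {**row, "name": str(row["name"]).strip()}


def upper_country(row: Row) -> Row:
    return {**row, "country": str(row["country"]).upper()}


RULES: List[Rule] = [trim_name, upper_country]


def provision(engine: Engine, rows: List[Row]) -> List[Row]:
    """Run the same rules on whichever engine is plugged in."""
    return engine.run(rows, RULES)


result = provision(LocalEngine(), [{"name": " Ada ", "country": "uk"}])
```

Swapping `LocalEngine` for an engine backed by another technology would leave `RULES` unchanged, which is the point of keeping the two apart.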

Manage Data Lineage and Data Certification

In addition to a wide variety of ETL control validations, the open architecture allows pluggable, business-centric quality and validation checks. Combined with metadata tagging and harvesting, these checks allow data entities to be certified for various uses.
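A pluggable check can be sketched as a function registered under a name, with certification recorded as metadata about which checks passed. The Python below is an illustration under that assumption; the `register` decorator, the `CHECKS` registry, and the check names are hypothetical and not part of the framework's documented interface.

```python
from typing import Callable, Dict, List

Row = Dict[str, object]
Check = Callable[[List[Row]], bool]

# Hypothetical registry that business teams could extend with their own checks.
CHECKS: Dict[str, Check] = {}


def register(name: str) -> Callable[[Check], Check]:
    """Decorator that plugs a check into the registry under the given name."""
    def wrap(fn: Check) -> Check:
        CHECKS[name] = fn
        return fn
    return wrap


@register("non_empty")
def non_empty(rows: List[Row]) -> bool:
    return len(rows) > 0


@register("amount_non_negative")
def amount_non_negative(rows: List[Row]) -> bool:
    return all(r["amount"] >= 0 for r in rows)


def certify(rows: List[Row], required: List[str]) -> Dict[str, object]:
    """Run the required checks and return a certification tag for the data set."""
    results = {name: CHECKS[name](rows) for name in required}
    return {"certified": all(results.values()), "checks": results}


tag = certify([{"amount": 5}], ["non_empty", "amount_non_negative"])
```

Different uses of the same data entity could require different lists of checks, so one entity may be certified for one purpose and not for another.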

Optimize Performance and Elasticity of Data Pipelines

The framework scales the resources used for concurrency and parallelism up and down as needed.
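As a rough illustration of adjustable concurrency, the Python sketch below processes data partitions with a worker pool whose size is a parameter. It uses the standard library's `ThreadPoolExecutor`; the `run_partitions` function and the sample partitions are assumptions made for the example, and a production pipeline would more likely scale cluster or cloud resources than local threads.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, List, Sequence


def run_partitions(
    partitions: Sequence[List[int]],
    task: Callable[[List[int]], int],
    max_workers: int,
) -> List[int]:
    """Apply `task` to every partition using at most `max_workers` threads."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # pool.map returns results in the same order as the input partitions.
        return list(pool.map(task, partitions))


partitions = [[1, 2], [3], [4, 5, 6]]

# The same workload run with a small pool and a larger one.
scaled_down = run_partitions(partitions, sum, max_workers=1)
scaled_up = run_partitions(partitions, sum, max_workers=3)
```

Both calls produce the same results; only the degree of parallelism changes, which is the knob an elastic pipeline turns as load rises and falls.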